Agile Testing Metrics that every tester must know | BrowserStack


Before discussing Agile testing metrics, let’s answer a few background questions: what Agile testing is, what software testing metrics are, and what qualities make a testing metric the “right” one.


What is Agile Testing?

Agile testing has become a core part of the agile software development process. In earlier software development methodologies, testing was a separate stage that began only after development was complete. In Agile, however, testing is continuous and starts at the beginning of the project, sometimes even before development. Because it goes hand in hand with development, Agile testing provides a constant feedback loop and aims to create a superior-quality product by applying the “fail fast” principle.

Furthermore, in agile testing, testers are no longer a separate organizational unit; they are part of the “agile development team” itself. Many organizations have no dedicated testers or QA engineers, while in others, test specialists work closely with developers throughout the SDLC.

According to the Global Software Testing Services Market 2022-2026 report, the software testing market is poised to grow by $55.76 bn during 2022-2026, progressing at a CAGR of 15%. The 15th State of Agile Report shows significant growth in Agile adoption within software development teams, from 37% in 2020 to 86% in 2021. It is evident that agile testing will remain part and parcel of the SDLC for the foreseeable future.


What are Software Testing Metrics?

A software testing metric is a measure that helps track the effectiveness and efficiency of software QA activities. Metrics establish markers of success: a target is set for each metric, and the actual results are benchmarked against it once the process is complete.

However, choosing the right testing metrics is not easy. Teams often choose metrics that do not align with the requirements of the business at large, and without effective benchmarks, stakeholders cannot measure success or identify areas for improvement. The right metrics also help track progress, skill level, and task-based success within teams.

All QA leaders must therefore set the “right” testing metrics for their teams to follow.

What are the qualities of the “right” testing metric for Agile teams?

Some commonly agreed characteristics of the “right” agile testing metric are as follows:

- Essential to business objectives and growth: A metric should reflect the company’s primary growth objective. For a new company, this might be month-on-month revenue growth or the number of new users acquired; for a more mature organization, it might be customer churn. Since business objectives vary from company to company and project to project, the metrics should vary too.

- Allows improvement: Every metric chosen should leave room for improvement. Ideally, progress should be incremental, starting from a baseline value and measured against a target value. For example, month-on-month revenue growth is an incremental metric. If a metric (such as customer satisfaction) is already at 100%, the goal might be to maintain that status.

- Opens the way for a strategy: A metric should not be calculated just for the sake of measurement. Once a metric sets a team’s goal, it should also prompt the team to ask relevant questions and formulate a plan.

- Easily trackable and explainable: Finally, a good metric should be easy to explain and as intuitive as possible.


Testing Metrics for Agile Testing that every tester must know

Software testing has evolved significantly since the days of the Waterfall software development model. With the growing adoption of Agile methodologies, several key metrics used by traditional QA teams, such as the number of test cases, have become irrelevant to the bigger picture. It is therefore important to understand which metrics are vital to improving software testing in an Agile SDLC.

Before listing the metrics themselves, it is worth looking at the transition from Waterfall to Agile testing metrics, and at how key Agile development metrics are repurposed by QA personnel to quantify testing activity and measure software quality.

In the Waterfall model of yore, QA was separate from software development and performed by a dedicated QA team. The Waterfall model was non-iterative and required each stage to be completed before the next could begin. An independent QA team would often define the test cases to determine whether the software met the initial requirement specifications.

The QA dashboard would focus on the software application and measure four key dimensions:

- Product Quality: The number and rate of defects were used to measure software quality.

- Test Effectiveness: Code coverage provided insight into test effectiveness. QA also focused on requirements-based and functional tests, and the reports from these tests were used to measure their efficacy.

- Test Status: This would report the number of tests run, passed, blocked, etc., and provide a snapshot of testing status.

- Test Resources: This would record the time taken to test the software application and the associated cost.

However, modern Agile development relies on collaboration across cross-functional teams. As a result, the metrics above have become less useful, because they do not reflect the bigger picture for the team. Also, with testing and development now happening concurrently, it is imperative to use metrics that reflect this integrated approach. The shared goals and expectations of Agile teams, which include both developers and testers, give rise to new metrics that serve the whole team from a unified point of view.

Broadly, Agile testing metrics can be classified into two types:

- Type 1: General Agile metrics adapted to be relevant to software testing.

- Type 2: Specific test metrics applicable to the Agile Development Environment.

These are described in some detail below:

Type 1 Agile Metrics

Sprint Burndown

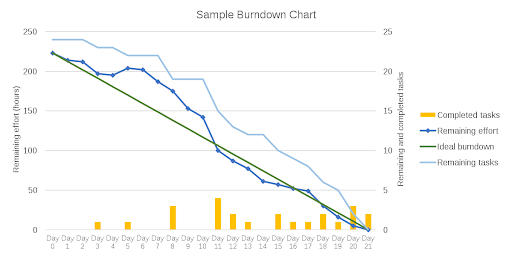

Sprint burndown charts are commonly used by Agile teams to graphically depict the rate at which tasks are completed and the amount of work remaining during a defined sprint.

Its relevance to Agile testing is as follows:

Since every completed task in an Agile sprint must have been tested, the burndown chart can double as a measure of the percentage of testing done. The definition of done can also include a condition such as “tested with 100 percent unit test code coverage”.
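A burndown chart is simple arithmetic: the work remaining at the end of each day is the sprint’s committed total minus everything completed so far, plotted against an ideal straight line. The sketch below assumes work is tracked in story points and that points completed per day are available; the function names are illustrative, not taken from any particular tool.

```python
from typing import List


def remaining_work(total_points: float, completed_per_day: List[float]) -> List[float]:
    """Story points still open at the end of each sprint day."""
    remaining = []
    left = total_points
    for done in completed_per_day:
        left -= done
        remaining.append(left)
    return remaining


def ideal_burndown(total_points: float, sprint_days: int) -> List[float]:
    """Ideal line: burn the same amount every day and reach zero on the last day."""
    per_day = total_points / sprint_days
    return [total_points - per_day * day for day in range(1, sprint_days + 1)]


def percent_done(total_points: float, completed_per_day: List[float]) -> float:
    """Share of committed work that is done (and, per the definition of done, tested)."""
    return 100.0 * sum(completed_per_day) / total_points


# Hypothetical 5-day sprint with 40 committed story points.
daily = [5, 8, 0, 10, 7]
actual = remaining_work(40, daily)   # [35, 27, 27, 17, 10]
ideal = ideal_burndown(40, 5)        # [32.0, 24.0, 16.0, 8.0, 0.0]
done_pct = percent_done(40, daily)   # 75.0
```

Days where the actual line sits above the ideal line (here, every day) signal that the sprint, and therefore its testing, is running behind plan.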

Number of Working Tested Features / Running Tested Features

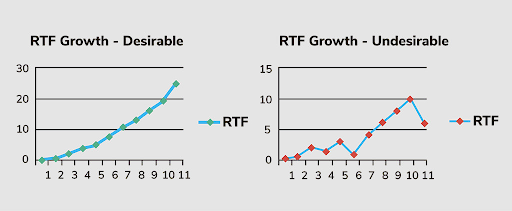

The Running Tested Features (RTF) metric indicates the number of fully developed software features that have passed all their acceptance tests and are implemented in the integrated product.

Its relevance to Agile testing is as follows:

The RTF metric counts only those features that have passed all their acceptance tests, so it provides a benchmark for how much comprehensive testing has been completed. And because every feature shipped to the client has been tested, more features shipped means more of the software has been tested.
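RTF is usually tracked sprint over sprint, and the count itself is a straightforward filter. A minimal sketch, assuming each feature records whether it is integrated and the pass/fail result of each of its acceptance tests; the `Feature` type is hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Feature:
    name: str
    integrated: bool  # merged into the integrated product build
    acceptance_results: List[bool] = field(default_factory=list)  # one entry per acceptance test


def running_tested_features(features: List[Feature]) -> int:
    """Count features that are integrated AND pass every one of their acceptance tests.

    A feature with no acceptance tests is not counted: all([]) is True in Python,
    so the explicit emptiness check matters.
    """
    return sum(
        1
        for f in features
        if f.integrated and f.acceptance_results and all(f.acceptance_results)
    )


features = [
    Feature("login", True, [True, True]),
    Feature("search", True, [True, False]),  # one failing acceptance test
    Feature("export", False, [True]),        # not integrated yet
    Feature("profile", True, []),            # no acceptance tests written yet
]
rtf = running_tested_features(features)  # 1
```

Excluding features without acceptance tests keeps the metric honest: a feature cannot count as “tested” just because nobody has written its tests yet.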

Velocity

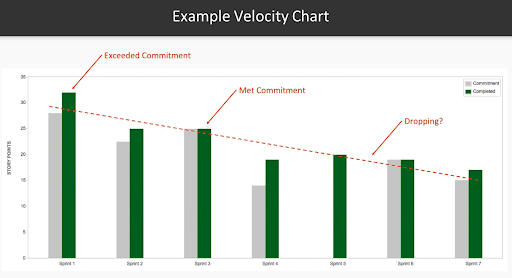

Velocity measures how much work a team completes on average during each sprint, comparing the tasks actually completed with the team’s estimated effort.

Agile test managers can use this to predict how rapidly a team can work towards a specific goal by comparing the average story points or hours committed to and completed in previous sprints.
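Velocity and the forecast described above come down to averages over previous sprints. A minimal sketch, assuming story points per sprint are available as plain lists; the function names and the sample numbers are illustrative only.

```python
import math
from statistics import mean
from typing import List


def average_velocity(completed_points: List[float]) -> float:
    """Average story points completed per sprint over previous sprints."""
    return mean(completed_points)


def sprints_to_finish(remaining_points: float, completed_points: List[float]) -> int:
    """Forecast how many sprints the remaining work needs at the average velocity."""
    return math.ceil(remaining_points / average_velocity(completed_points))


def completion_ratio_pct(committed: List[float], completed: List[float]) -> float:
    """Work completed vs. work committed across sprints, as a percentage."""
    return 100.0 * sum(completed) / sum(committed)


committed = [30, 32, 28]  # story points committed in the last three sprints
completed = [26, 30, 28]  # story points actually completed
velocity = average_velocity(completed)              # 28 points per sprint
forecast = sprints_to_finish(100, completed)        # 4 sprints (100 / 28 is about 3.6)
ratio = completion_ratio_pct(committed, completed)  # about 93.3
```

Rounding the forecast up reflects that a partially used sprint still has to be scheduled.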

Its relevance to Agile testing is as follows:

The higher a team’s velocity, the faster it produces software features. Since each feature must be tested before it counts as done, higher velocity can also speed up the completion of software testing.


Cumulative Flow

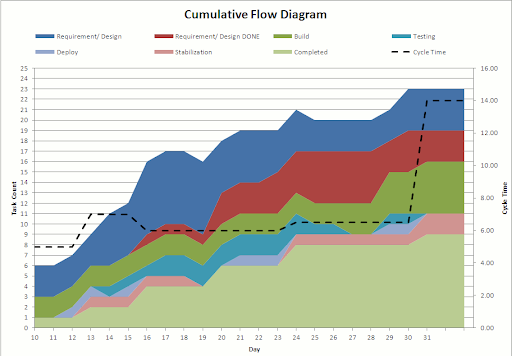

The Cumulative Flow Diagram (CFD) provides summary information for a project, including work-in-progress, completed tasks, testing, velocity, and the current backlog.

It allows bottlenecks in the Agile process to be visualized. Disproportionately wide colored bands represent workflow stages with too much work in progress. Thin bands represent stages that are “starved” because earlier stages are taking too long.
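The data behind a CFD is a daily snapshot of how many work items sit in each workflow stage; each band’s thickness is that stage’s count. The sketch below stacks the bands for one day and flags the in-process stage with the widest band on average as a likely bottleneck. This “widest average band” rule is a simple heuristic assumed for illustration, and the stage names are hypothetical.

```python
from typing import Dict, List

# One snapshot per day: number of work items currently in each workflow stage.
Snapshot = Dict[str, int]


def stacked_bands(snapshot: Snapshot, stage_order: List[str]) -> Dict[str, int]:
    """Height of each band's top edge, stacking from the last stage (Done) upward."""
    heights, running = {}, 0
    for stage in reversed(stage_order):
        running += snapshot.get(stage, 0)
        heights[stage] = running
    return heights


def likely_bottleneck(history: List[Snapshot], in_process: List[str]) -> str:
    """In-process stage whose band is widest on average, i.e. holds the most WIP."""
    def avg_wip(stage: str) -> float:
        return sum(day.get(stage, 0) for day in history) / len(history)
    return max(in_process, key=avg_wip)


stages = ["Backlog", "In Progress", "Testing", "Done"]
history = [
    {"Backlog": 20, "In Progress": 4, "Testing": 2, "Done": 0},
    {"Backlog": 17, "In Progress": 4, "Testing": 5, "Done": 0},
    {"Backlog": 14, "In Progress": 3, "Testing": 8, "Done": 1},
]
top_edges = stacked_bands(history[-1], stages)
# {"Done": 1, "Testing": 9, "In Progress": 12, "Backlog": 26}
bottleneck = likely_bottleneck(history, ["In Progress", "Testing"])  # "Testing"
```

In this made-up data, items pile up in Testing while Done barely grows, which is exactly the widening band a CFD makes visible.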

Its relevance to Agile testing is as follows:

Since testing is a mandatory part of the Agile workflow, it appears as a stage in the CFD. Teams can use the CFD to analyze whether testing itself is a bottleneck, or whether bottlenecks in other stages are affecting testing.

Earned Value Analysis

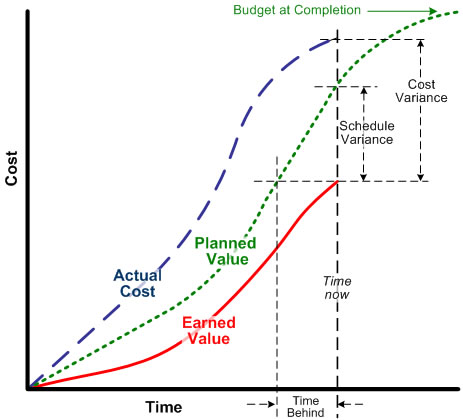

Earned Value Analysis, part of Earned Value Management (EVM), encompasses a series of measurements that compare a planned baseline value, set before the project begins, with actual technical progress and hours spent. Values are typically expressed in dollars, and in Agile projects, story points are used to measure earned value and planned value.

EVM methods can be used at many levels, from a single task to the total project. The chart above shows what this typically looks like.

Its relevance to Agile testing is as follows:

- EVM techniques in Agile can be used to determine the economic value of the software testing process.

- EVM methods help determine whether software tests are cost-effective by comparing planned value with earned value at the level of a single task.
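One common Agile adaptation of EVM derives planned value (PV) and earned value (EV) from story points: PV is the share of the budget the plan expected to have used by now, and EV is the share corresponding to the work actually completed. The sketch applies the standard formulas EV = BAC × completed / total points, PV = BAC × planned / total points, CPI = EV / AC, and SPI = EV / PV. The class, its field names, and the sample figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class EvmSnapshot:
    budget: float                  # budget at completion (BAC), e.g. in dollars
    total_points: float            # story points planned for the release
    planned_points_to_date: float  # points the plan expected to be done by now
    completed_points: float        # points actually done (and tested) by now
    actual_cost: float             # money actually spent so far (AC)

    @property
    def planned_value(self) -> float:
        return self.budget * self.planned_points_to_date / self.total_points

    @property
    def earned_value(self) -> float:
        return self.budget * self.completed_points / self.total_points

    @property
    def cost_performance_index(self) -> float:
        # CPI > 1: under budget; CPI < 1: over budget.
        return self.earned_value / self.actual_cost

    @property
    def schedule_performance_index(self) -> float:
        # SPI > 1: ahead of schedule; SPI < 1: behind schedule.
        return self.earned_value / self.planned_value


snap = EvmSnapshot(budget=100_000, total_points=200,
                   planned_points_to_date=80, completed_points=70, actual_cost=38_000)
# PV = 40,000; EV = 35,000; CPI is about 0.92; SPI = 0.875
```

Both indices below 1 would indicate that the work, including its testing, is costing more and progressing more slowly than planned.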

Type 2 Agile Metrics

Unlike the previous metrics, which were standard Agile metrics repurposed to suit Agile testing, the ones below are designed specifically for testing in an Agile environment.

Percentage of Automated Test Coverage

This metric measures the percentage of test coverage achieved through automated testing. Over time, more tests should be automated, and test coverage should ideally increase.

It is critical for Agile teams because test automation is one of the primary ways to ensure high test coverage, and the number of test cases only grows as functionality is added in every sprint. Test coverage can also serve as a basic measure of risk: the higher the coverage, the lower the chances of production defects.
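The metric can be computed at different granularities, such as lines of code, requirements, or user stories. A minimal sketch, assuming coverage is tracked per requirement and each requirement records which kinds of tests exercise it; the requirement IDs and the "automated"/"manual" labels are hypothetical.

```python
from typing import Dict, Set


def automated_coverage_pct(all_items: Set[str], covered_by: Dict[str, Set[str]]) -> float:
    """Percentage of coverage items exercised by at least one automated test.

    `covered_by` maps each item to the kinds of tests that exercise it,
    e.g. {"REQ-1": {"automated", "manual"}}. Items missing from it are uncovered.
    """
    if not all_items:
        return 0.0
    automated = {item for item in all_items if "automated" in covered_by.get(item, set())}
    return 100.0 * len(automated) / len(all_items)


requirements = {"REQ-1", "REQ-2", "REQ-3", "REQ-4"}
coverage = {
    "REQ-1": {"automated"},
    "REQ-2": {"manual"},             # covered, but only manually
    "REQ-3": {"automated", "manual"},
}                                    # REQ-4 has no tests at all
pct = automated_coverage_pct(requirements, coverage)  # 50.0
```

Recording this value at the end of every sprint shows whether automation keeps pace with newly added functionality.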


Code Complexity & Static Code Analysis

Code complexity is commonly measured as cyclomatic complexity, which counts the number of linearly independent paths through a program’s source code. Static code analysis uses tools to examine the code without executing it. This process can unearth issues like lexical errors, syntax mistakes, and sometimes semantic errors.

Agile teams need to write simple, readable code to reduce defect counts. Static analysis helps them check the code structure and ensure it adheres to industry standards such as consistent indentation, inline comments, and correct use of spacing.
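Cyclomatic complexity can be approximated as one plus the number of decision points: branches, loops, exception handlers, and extra boolean conditions. The Python sketch below does this with the standard `ast` module, which parses the code without running it, so it is also a small example of static analysis. The counting rule is a simplified one chosen for illustration; dedicated tools may count some constructs differently.

```python
import ast


def cyclomatic_complexity(source: str) -> int:
    """Approximate McCabe complexity: 1 + number of decision points.

    The code is only parsed, never executed. If the source has a syntax error,
    ast.parse raises SyntaxError, which is itself a static-analysis finding.
    """
    tree = ast.parse(source)
    decisions = 0
    for node in ast.walk(tree):
        if isinstance(node, (ast.If, ast.IfExp, ast.For, ast.AsyncFor,
                             ast.While, ast.ExceptHandler)):
            decisions += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` has three operands and adds two extra paths.
            decisions += len(node.values) - 1
        elif isinstance(node, ast.comprehension):
            # Each `for` clause in a comprehension, plus each of its `if` filters.
            decisions += 1 + len(node.ifs)
    return decisions + 1


sample = """
def classify(x):
    if x > 0 and x < 10:
        return "small"
    for _ in range(x):
        pass
    return "other"
"""
complexity = cyclomatic_complexity(sample)  # 4: one if, one extra `and`, one for
```

A team might, for example, flag functions above an agreed threshold for review or refactoring during the sprint.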

Defects Found in Production/Escaped Defects

This metric counts the defects in a release that are found after the release date by customers rather than by the QA team. Escaped defects tend to be quite costly to fix, so it is crucial to analyze them carefully and reduce their number from a baseline value.

Agile teams can ensure continuous improvement in testing by identifying the root cause of escaped defects and preventing their recurrence in subsequent releases. Escaped defects can be reported per unit of time, per sprint, or per release, providing specific insights into what went wrong with development or testing in a specific part of the project.
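Counting escaped defects per release, and expressing them as a share of all defects found for that release (often called the defect escape rate), gives a baseline to improve against. A minimal sketch, assuming each defect record notes its release and whether it was found in production; the `Defect` type and the sample data are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Defect:
    release: str
    found_in_production: bool  # True if reported after release, e.g. by a customer


def escaped_defects_by_release(defects: List[Defect]) -> Dict[str, int]:
    """Number of production (escaped) defects per release."""
    counts: Dict[str, int] = defaultdict(int)
    for d in defects:
        if d.found_in_production:
            counts[d.release] += 1
    return dict(counts)


def escape_rate_pct(defects: List[Defect], release: str) -> float:
    """Share of a release's defects that escaped to production."""
    in_release = [d for d in defects if d.release == release]
    if not in_release:
        return 0.0
    escaped = sum(d.found_in_production for d in in_release)
    return 100.0 * escaped / len(in_release)


defects = [
    Defect("1.0", False), Defect("1.0", False), Defect("1.0", False), Defect("1.0", True),
    Defect("1.1", False), Defect("1.1", True), Defect("1.1", True),
]
by_release = escaped_defects_by_release(defects)  # {"1.0": 1, "1.1": 2}
rate_1_0 = escape_rate_pct(defects, "1.0")         # 25.0
```

A rising escape rate between releases, as in this made-up data, is the signal to start the root-cause analysis described above.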

Defect Categories

Finding defects is not enough; they should also be categorized to obtain qualitative information. A software defect can be categorized as a functionality error, a communication error, a security bug, and so on. Pareto charts can represent these categories and identify which ones to improve on in subsequent sprints.
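A Pareto chart sorts defect categories by frequency and overlays the cumulative percentage, so the few categories responsible for most defects stand out. A minimal sketch, assuming each defect carries a single category label; the 80% cut-off is the conventional Pareto rule of thumb, not a fixed requirement, and the sample counts are made up.

```python
from collections import Counter
from typing import List, Tuple


def pareto(categories: List[str]) -> List[Tuple[str, int, float]]:
    """Categories sorted by count (descending) with the cumulative % of all defects."""
    counts = Counter(categories).most_common()
    total = sum(c for _, c in counts)
    rows, running = [], 0
    for category, count in counts:
        running += count
        rows.append((category, count, 100.0 * running / total))
    return rows


def vital_few(rows: List[Tuple[str, int, float]], threshold: float = 80.0) -> List[str]:
    """Smallest leading set of categories that together reach `threshold` percent."""
    selected = []
    for category, _, cumulative in rows:
        selected.append(category)
        if cumulative >= threshold:
            break
    return selected


log = ["functionality"] * 12 + ["communication"] * 5 + ["security"] * 3
rows = pareto(log)
# [("functionality", 12, 60.0), ("communication", 5, 85.0), ("security", 3, 100.0)]
focus = vital_few(rows)  # ["functionality", "communication"]
```

The categories returned by `vital_few` are the natural candidates for improvement in the next sprint.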


Defect Cycle Time

This measures the time taken between starting work on fixing a bug and fully resolving it. As a rapid resolution of defects is critical for product release velocity, reducing the defect cycle time should be a priority for all Agile teams.
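Given the time at which work on each bug started and the time it was resolved, cycle time is a simple difference. A minimal sketch, assuming those timestamps can be exported from the team’s tracker as pairs; the sample records are invented. Reporting the median alongside the mean helps because a single long-running bug can skew the average.

```python
from datetime import datetime, timedelta
from statistics import median
from typing import List, Tuple

# (work started, defect resolved) pairs; the export format is an assumption.
Record = Tuple[datetime, datetime]


def cycle_time_hours(records: List[Record]) -> List[float]:
    """Hours between starting work on each bug and fully resolving it."""
    return [(resolved - started) / timedelta(hours=1) for started, resolved in records]


def summarize(records: List[Record]) -> Tuple[float, float]:
    """Mean and median defect cycle time, in hours."""
    hours = cycle_time_hours(records)
    return sum(hours) / len(hours), median(hours)


records = [
    (datetime(2024, 3, 4, 9), datetime(2024, 3, 4, 17)),   # 8 hours
    (datetime(2024, 3, 4, 10), datetime(2024, 3, 6, 10)),  # 48 hours
    (datetime(2024, 3, 5, 9), datetime(2024, 3, 5, 13)),   # 4 hours
]
mean_h, median_h = summarize(records)  # 20.0, 8.0
```

Here one 48-hour fix pulls the mean well above the typical (median) cycle time of 8 hours.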


Defect Spillover

Defect spillover is the number of defects that remain unresolved in a particular sprint or iteration. Measuring spillover minimizes the chances of teams getting stuck in the future because of a build-up of technical debt, and gives an idea of how efficiently the team as a whole deals with such defects.
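A minimal sketch, assuming spillover is counted as the defects still unresolved when the sprint ends, including any carried over from earlier sprints. Teams define this slightly differently, and the `DefectRecord` type and sample data are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class DefectRecord:
    key: str
    opened: date
    resolved: Optional[date] = None  # None while the defect is still open


def spillover(defects: List[DefectRecord], sprint_end: date) -> List[str]:
    """Keys of defects opened by the sprint end but not resolved by then."""
    return [
        d.key
        for d in defects
        if d.opened <= sprint_end and (d.resolved is None or d.resolved > sprint_end)
    ]


sprint_end = date(2024, 5, 14)
defects = [
    DefectRecord("BUG-1", date(2024, 5, 1), date(2024, 5, 3)),   # fixed within the sprint
    DefectRecord("BUG-2", date(2024, 5, 2)),                     # still open
    DefectRecord("BUG-3", date(2024, 5, 6), date(2024, 5, 20)),  # fixed only after sprint end
    DefectRecord("BUG-4", date(2024, 5, 20)),                    # opened after sprint end
]
spilled = spillover(defects, sprint_end)  # ["BUG-2", "BUG-3"]
```

Tracking `len(spilled)` sprint over sprint shows whether unresolved defects are accumulating into technical debt.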


Conclusion

The Agile SDLC differs from the legacy Waterfall models of yore. Figuring out the correct agile testing metrics and using them to ensure software quality should therefore be the watchword for any QA leader worth their salt. QA metrics are critical in Agile processes because managers have to pay close attention to even the smallest sprint goals. Refined, specific metrics help testers stay on track.

The entire QA process hinges on the use of a real device cloud. Without real device testing, it is impossible to identify every bug a user may encounter, and undetected bugs cannot be tracked, monitored, or resolved. Moreover, QA metrics cannot set baselines and measure success without accurate information on bugs. This holds true for manual testing as well as automation testing.

BrowserStack’s real device cloud provides 3000+ real browsers and devices for instant, on-demand testing. It also integrates with popular tools such as Jira, and with CI/CD tools such as Jenkins, TeamCity, and Travis CI. Additionally, built-in debugging tools let testers identify and resolve bugs immediately. BrowserStack also facilitates Cypress testing on 30+ browser versions with instant, hassle-free parallelization.
