How to develop a successful quality assurance framework – EvaluAgent



One of the biggest issues for growing customer service teams is the inability or failure to measure and manage the quality of service and conversation that front-line advisors are having with customers.

It is easy to focus on performance metrics such as call answer times, as they are readily available from systems. The quality of conversation is arguably more important, but measuring it is not as simple.

Run a quick Google search, and you’ll find thousands of scorecard templates that you could download. One of these may be a good starting point, but it is crucial to adapt it to meet your specific needs.

In this blog, I will explain how to develop a quality framework: an approach that will deliver real improvements to your QA process, your team, your organisation and your customers by developing a quality scorecard that measures, manages and supports the delivery of a high standard of service.

The quality of service is of critical importance for contact centres as it is an accurate indicator of performance relating to the customer. Quality scores and customer perception of service are two of the most used metrics in managing performance in successful operations, so it is critical that the measurement is itself of a high quality.

Creating a Quality Framework. Where should you start?

In Contact Centres, the people who make things happen are Team Leaders. A good Team Leader will know the strengths and weaknesses of each member of their team and will be able to guide and motivate them to deliver their best. They have an organisational responsibility to meet the corporate objectives but also provide the bridge between the company and individual Advisors. Yet many Team Leaders lack the required tools and processes. Having insight into, and an overview of, the quality of service provided by each member of their team is essential to support the development of Advisors.

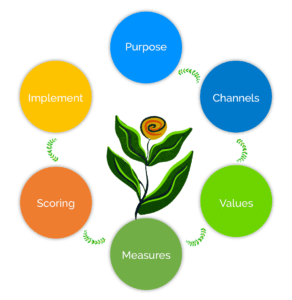

When call centres ask me, “How do I create a Quality Assurance framework?”, I typically recommend a six-stage process.

This includes:

- Purpose

- Channels

- Values

- Measures

- Scoring

- Implement

Step 1. Understand the purpose

The first area to address when building a scorecard or framework is the purpose of QA: what are we measuring and why?

It is often the case that measuring quality is about developing a score to report as a KPI, but quality scorecards can and should deliver so much more than this.

When Quality is used correctly, it can be an effective tool to:

- Measure compliance with regulations

- Assess adherence to policy

- Measure the customer experience

- Monitor Advisors’ performance

- Change Advisors’ behaviour

There needs to be clarity about what the scorecard is going to be used for and then ensure that the design meets these objectives.

Step 2. Identify the Channels

Call Recording Quality Management (CRQM) was originally developed around call scoring and reviewing the telephone call recordings. In today’s multi-channel environments, some Advisors may not handle telephone calls. Instead many may deal exclusively with Live Chat, email, social media or a wide range of channels.

Customers may never interact with an organisation over the telephone, but the quality of the engagement still needs to be measured. Different channels have different components, and therefore the measures need to be adapted.

For example:

Live Chat will involve a written discussion, so the quality of spelling and the correct use of grammar is a critical measure of quality.

vs.

An email is still a written interaction but is usually more formal than Live Chat so a different evaluation of style will be required.
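
One way to picture channel-specific measures is as a shared core plus a set per channel. The sketch below is illustrative only: the measure names, the `CORE_MEASURES` and `CHANNEL_MEASURES` mappings and the `measures_for` helper are all assumptions, not a prescribed list.

```python
# Hypothetical measures shared by every channel.
CORE_MEASURES = ["Resolved the customer's query", "Followed the correct process"]

# Hypothetical channel-specific measures; adapt these to your own operation.
CHANNEL_MEASURES = {
    "phone": ["Tone of voice", "Call opening and closure"],
    "live_chat": ["Spelling and grammar", "Conversational but professional style"],
    "email": ["Spelling and grammar", "Formal style and structure"],
}


def measures_for(channel):
    """Return the shared measures plus those specific to the given channel."""
    if channel not in CHANNEL_MEASURES:
        raise KeyError(f"no scorecard defined for channel: {channel}")
    return CORE_MEASURES + CHANNEL_MEASURES[channel]


for channel in CHANNEL_MEASURES:
    print(channel, measures_for(channel))
```

Keeping the core measures separate makes it easier to compare quality across channels while still evaluating each channel on its own terms.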

Step 3. Align with Company Objectives and Brand Values

The objectives of the contact centre and the values of the brand should be incorporated into the design of a scorecard. There are thousands of scorecards available to download, but the type of organisation and the value of the services that it provides will impact the points of assessment and the scoring.

For example:

- A high-value sales organisation would require different scoring criteria to a low-value service.

- In Financial Services and other regulated sectors, there is a requirement for particular terminology and wording to be used, but this is not the case in all industries.

- Inbound communications will have a different context to outbound where a different level of introduction will be required.

All of these factors make a difference to the scoring criteria and often affect how Advisors operate and behave.

Step 4. Develop Specific Measures

The previous three steps highlight some of the factors involved in the design of a scorecard. Using the information gathered so far as a baseline, the next step is to map out the measurements: the specifics that need to be assessed and measured.

These may be varied and cover a wide range of areas, but we advise that the overall composition of the scorecard be simple to use. This could result in the creation of sections to house questions that relate to one another.

For example:

- Compliance

- Customer Experience

- Process

Within each of these areas, there may be several specific measures that contribute to a rating for that area.

Of real interest is the development of measures from step 1, which relate to a mixture of mandatory and discretionary components of a contact.

For example, if the contact relates to a regulated area, then it may be compulsory to state a specific phrase. If this is the case, then that should be a specific measure or contribute to a particular compliance measure.
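
As a rough sketch of how sections and mandatory measures might be represented, the example below groups questions under the three example sections. The `Measure` and `Section` classes, the question wording and the `mandatory_questions` helper are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Measure:
    question: str
    mandatory: bool = False  # True for compulsory items, e.g. a regulated phrase


@dataclass
class Section:
    name: str
    measures: List[Measure] = field(default_factory=list)


# Section names follow the article; the questions are illustrative assumptions.
scorecard = [
    Section("Compliance", [
        Measure("Stated the required regulatory phrase", mandatory=True),
    ]),
    Section("Customer Experience", [
        Measure("Built rapport with the customer"),
        Measure("Used clear, correct language"),
    ]),
    Section("Process", [
        Measure("Completed customer verification", mandatory=True),
    ]),
]


def mandatory_questions(sections):
    """Return the questions that must be met on every applicable contact."""
    return [m.question for s in sections for m in s.measures if m.mandatory]


print(mandatory_questions(scorecard))
```

Flagging mandatory items explicitly keeps compulsory and discretionary components visible in the design, rather than burying them in a single overall score.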


Step 5. Identify Scoring

Scoring is the most contentious area of scorecards, as measures can be both objective and subjective.

Where there are specific compliance matters, the outcome is simple: yes or no. However, when the issue is more subjective, e.g. building rapport, you could use a range of different scoring templates and outcomes, such as a three, four or ten point scale.

| Type | Score | Notes |
| --- | --- | --- |
| Binary | Yes / No | Used where clear compliant/non-compliant is easily measured. |
| R.A.G | Red, amber, green | Where a measure can be partially met in addition to Yes/No. |
| Scale | Range or scale such as 1-10 | A range of scores enable a more subjective measure to be applied e.g. 7 = met the requirement but could improve. |

For example:

Consider the call closure after a conversation with a disgruntled customer who is closing their account. Asking them if there is anything else that you can help them with may not be appropriate, and therefore this element should be removed from the call scoring for this interaction, or the Evaluator should have the option to score the question as N/A (not applicable).

Some areas of scoring are also more important than others, so consider weighting questions to reflect this. Compliance is a good example: in a regulated environment, it will be of much greater importance than having the correct call closure.
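
To make the combination of scoring types, N/A answers and weighting concrete, here is a minimal sketch. Everything in it is an assumption for illustration: the red/amber/green mapping, the linear conversion of a 1-10 scale, the weights, and the `normalise` and `weighted_score` helpers.

```python
# Assumed mapping of red/amber/green to a fraction of the available marks.
RAG_VALUES = {"red": 0.0, "amber": 0.5, "green": 1.0}


def normalise(kind, value):
    """Convert a raw answer to a 0-1 fraction, or None if it was scored N/A."""
    if value == "N/A":
        return None
    if kind == "binary":
        return 1.0 if value == "yes" else 0.0
    if kind == "rag":
        return RAG_VALUES[value]
    if kind == "scale":  # assumed 1-10 scale, mapped linearly onto 0-1
        return (value - 1) / 9
    raise ValueError(f"unknown scoring type: {kind}")


def weighted_score(answers):
    """answers: list of (kind, value, weight) tuples.

    N/A answers are left out of both the earned and the possible totals,
    so they neither reward nor penalise the Advisor.
    """
    earned = possible = 0.0
    for kind, value, weight in answers:
        fraction = normalise(kind, value)
        if fraction is None:
            continue
        earned += fraction * weight
        possible += weight
    return round(100 * earned / possible, 1) if possible else None


answers = [
    ("binary", "yes", 5),   # compliance statement, weighted most heavily
    ("rag", "amber", 2),    # process partially followed
    ("scale", 7, 2),        # rapport: met the requirement but could improve
    ("binary", "N/A", 1),   # call closure not appropriate for this contact
]
print(weighted_score(answers))
```

Excluding N/A questions from the denominator is one design choice among several; whichever approach you choose, apply it consistently so that scores remain comparable between interactions.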

Top tip: Do not overcomplicate a scorecard; it should be easy to complete, review and understand.

Step 6. Implementation

The final stage in developing the scorecard is planning the implementation. Introducing a new quality framework or any form of assessment can be a concern for Advisors, who may be suspicious of ‘being checked up on’. It is essential to involve Advisors in the development of the scorecard at all stages and to launch it properly.

A launch should include:

- Active involvement from Advisors in the development

- Communication with all stakeholders about the purpose and objectives including how it will be used

- Explanation about the questions and scoring criteria

- Briefing about the benefits to the organisation, customers and Advisors

- A pilot process to test the scorecard.

When implementing in large teams, or when multiple Evaluators are involved, it will also be essential to introduce a calibration process. There is a risk that one Evaluator may score differently to others. Being able to check this by sharing completed scorecards and ensuring that the scoring is consistent is vital in developing a fair approach.

Calibration discussions also help with the development of Evaluators and Team Leaders. This can be done by asking different members of the QA team to undertake the same assessment, or through group sessions where multiple stakeholders score the same interaction.
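
A calibration session in which several Evaluators score the same interaction could be summarised along the following lines. This is a sketch under assumptions: using the group mean as the reference point, the five-point tolerance and the `calibration_report` helper are illustrative choices, not an established standard.

```python
from statistics import mean


def calibration_report(scores, tolerance=5.0):
    """scores: Evaluator name -> score given to the same interaction.

    Returns each Evaluator's deviation from the group mean and flags
    anyone whose score differs by more than the tolerance.
    """
    group_mean = mean(scores.values())
    return {
        name: {
            "deviation": round(score - group_mean, 1),
            "out_of_tolerance": abs(score - group_mean) > tolerance,
        }
        for name, score in scores.items()
    }


# Hypothetical scores from three Evaluators for one recorded call.
session = {"Evaluator A": 80, "Evaluator B": 78, "Evaluator C": 67}
for name, result in calibration_report(session).items():
    print(name, result)
```

A flagged deviation is a prompt for discussion rather than proof that an Evaluator is wrong; the outlier may have spotted something the rest of the group missed.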

For more best practice on Calibration, check out our webinar: The six common challenges of Calibration and how to overcome them.

What now?

Building a great scorecard is one part of the puzzle, but it can quickly become just another tick-box exercise with little purpose. Feedback to Advisors is an essential part of managing and improving all areas of performance. Feedback should be shared with Advisors in real time, and regular one-to-ones should be scheduled where Advisors and their line managers have the chance to discuss overall performance, learning and development.

Having data from regular assessments will enable Team Leaders and Managers to build a profile of the individual team members but also the overall team. Analysing this information will start to highlight trends which in turn will enable prompt action to be taken to resolve issues.

Bad practice can start and spread through a Contact Centre surprisingly quickly, and ongoing analysis makes early intervention possible. Similarly, if the majority of the team are performing poorly in the same area, then additional team training may be delivered to resolve the issue.
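
The distinction between an individual issue and a team-wide one can be sketched with a simple aggregation. The data, the 70-point threshold, the "more than half the team" rule and the `weak_areas` helper are all assumptions for illustration.

```python
from collections import defaultdict
from statistics import mean


def weak_areas(assessments, threshold=70.0):
    """assessments: list of (advisor, section, score out of 100).

    Returns (team_wide, individual): sections where most Advisors average
    below the threshold, and sections where only some Advisors do.
    """
    by_section = defaultdict(lambda: defaultdict(list))
    for advisor, section, score in assessments:
        by_section[section][advisor].append(score)

    team_wide, individual = [], {}
    for section, advisors in by_section.items():
        averages = {a: mean(s) for a, s in advisors.items()}
        below = sorted(a for a, avg in averages.items() if avg < threshold)
        if len(below) > len(averages) / 2:  # majority of the team
            team_wide.append(section)       # candidate for team training
        elif below:
            individual[section] = below     # candidate for one-to-one coaching
    return team_wide, individual


# Hypothetical assessment data for a team of three Advisors.
assessments = [
    ("Ana", "Compliance", 95), ("Ben", "Compliance", 90), ("Cal", "Compliance", 60),
    ("Ana", "Customer Experience", 65), ("Ben", "Customer Experience", 60),
    ("Cal", "Customer Experience", 80),
]
team_wide, individual = weak_areas(assessments)
print("Team training:", team_wide)
print("Individual coaching:", individual)
```

Running this kind of summary regularly, rather than once, is what allows trends to surface early enough for prompt action.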

Quality scorecards are an aid to measuring and improving performance. They’re challenging to get right, but by following these steps, you will have a much better chance.

To leave you with one top tip:

Review your scorecard at least every six months. Is it working and meeting your requirements? Has it adapted to your changing business? Is it helping to improve the quality of performance?

By Tom Palmer

Tom is EvaluAgent’s Head of Digital and takes the lead on developing and implementing our digital and content management strategies to create a compelling, digital-first customer marketing experience.